The Big Question

State, federal, and international regulations mandate that any exposure of R&D trial plant materials to other planted crops (by accident or the whims of Mother Nature) be tracked, managed, mitigated, and reported.

This protocol is known as “Trait Exposure.”

The legacy approach was limited by a reliance on spreadsheets. How can we make this process more efficient, collaborative, and accurate?

Context

Kickoff & Alignment

IT’S A TRAP TO JUMP RIGHT INTO RESEARCH WITHOUT ENSURING EVERYONE IS ON THE SAME PAGE.

Responsibility for managing and reporting these exposures of regulated trial crop materials to standard, unregulated plantings belonged to a function called Trait Quality. Its stakeholder team included members from three large business functions within the Crop Science business.

The Project Manager reported that making forward progress on this well-known exposure issue was difficult because of a lack of direction and mixed, unarticulated assumptions. UX Research might be able to help. It was time to herd some cats.

Stakeholder Kickoff & The Five Whys

After a kickoff conversation with the PM, I began with stakeholder interviews to understand where the team was aligned, where there was daylight, and where there were assumptions in the team’s thinking. From there, I organized a formal kickoff meeting with the core team and used the information from the interviews to conduct a Five Whys exercise:

Uncovering the roots of the stakeholders’ requests will help align the team and move our goals from outputs to outcomes.

- “We want a new tool.”

- “Everyone hates using the existing legacy in-house and vendor tools.”

- “Those tools don’t fully support our work and are awful to use.”

- “That’s what we hear from our direct reports.”

- “They want a tool that supports their work without workarounds – and can be trusted for data reliability.”

With that, the team started to understand the real need.

Activities & Deliverables

- Stakeholder Interviews

- Kickoff Workshop & Assumption Mapping

- Project Brief

Research

Our primary research need was to document the current state of exposure processes and tools across five different business functions, understand how and why those processes differed, and evaluate the quality of the tools currently in use.

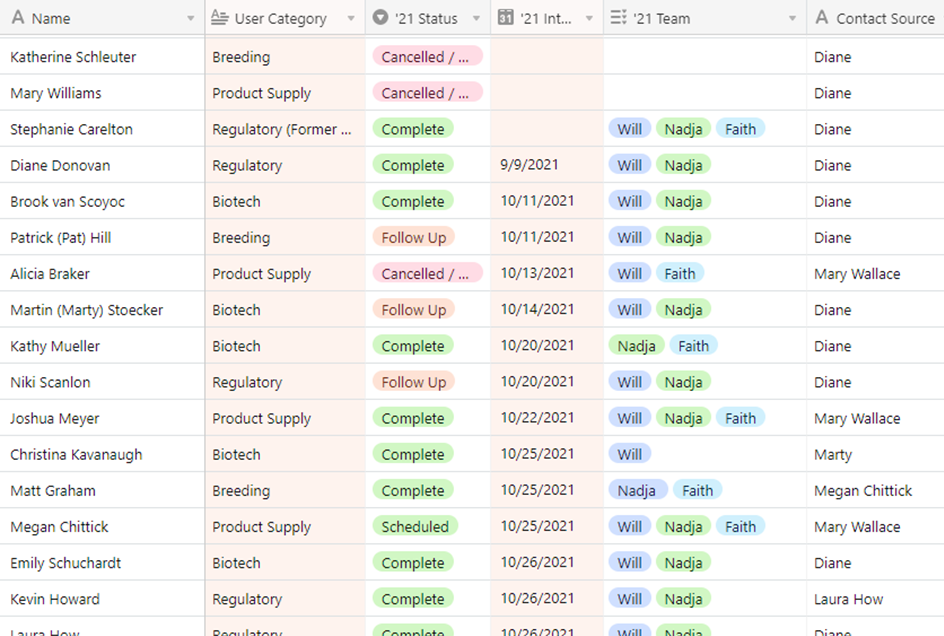

User Interviews / Contextual Inquiries

In-depth user interviews that incorporated elements of remote contextual inquiry were our primary approach to answering these questions.

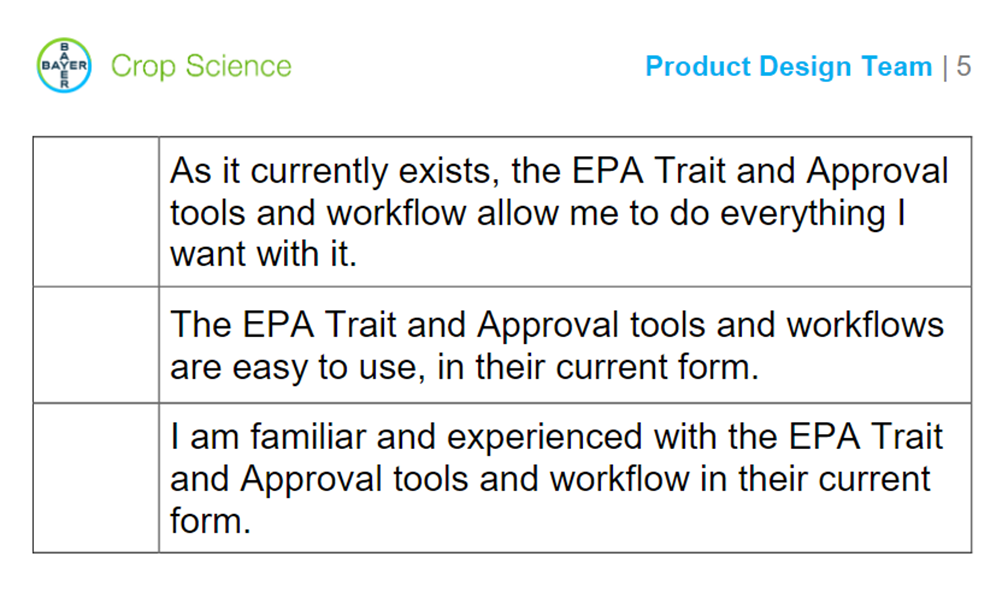

UMUX-Lite Surveys

The schedule didn't allow time for both qualitative research and quantitative benchmarking. We prioritized the “why” over the “what,” but still used a modified UMUX-Lite survey to benchmark user sentiment toward the existing tools and workflow:

- 7-point Likert scale

- Additional “power user” question included

- Used top-2 and bottom-2 box analysis of the power user question to segment responses to the value and ease-of-use questions
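The scoring and segmentation described above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis we actually ran: it assumes the two standard UMUX-Lite items (a capabilities/value item and an ease-of-use item) plus a power user self-rating item, all on the same 7-point scale, and treats ratings of 6-7 as top-2 box and 1-2 as bottom-2 box. The item wording for the power user question, and all field and function names, are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Optional

SCALE_MIN, SCALE_MAX = 1, 7  # 7-point Likert scale


@dataclass
class Response:
    """One survey response. Field names are hypothetical."""
    capabilities: int  # value item: "This system's capabilities meet my requirements."
    ease: int          # ease-of-use item: "This system is easy to use."
    power_user: int    # added item (hypothetical wording): "I consider myself a power user."

    def __post_init__(self) -> None:
        # Reject ratings outside the 7-point scale.
        for name in ("capabilities", "ease", "power_user"):
            value = getattr(self, name)
            if not SCALE_MIN <= value <= SCALE_MAX:
                raise ValueError(f"{name}={value} is outside the 1-7 scale")


def umux_lite(r: Response) -> float:
    """Standard UMUX-Lite score (0-100) from the two 7-point items."""
    return ((r.capabilities - 1) + (r.ease - 1)) / 12 * 100


def top2(rating: int) -> bool:
    """Top-2 box on a 7-point scale: a rating of 6 or 7."""
    return rating >= SCALE_MAX - 1


def bottom2(rating: int) -> bool:
    """Bottom-2 box on a 7-point scale: a rating of 1 or 2."""
    return rating <= SCALE_MIN + 1


def segment_summary(
    responses: list[Response], in_segment: Callable[[int], bool]
) -> Optional[dict]:
    """Summarize value and ease-of-use ratings for respondents whose
    power user rating falls in the given box (e.g. top2 or bottom2)."""
    group = [r for r in responses if in_segment(r.power_user)]
    if not group:
        return None
    return {
        "n": len(group),
        "capabilities_mean": mean(r.capabilities for r in group),
        "ease_mean": mean(r.ease for r in group),
        "umux_lite_mean": mean(umux_lite(r) for r in group),
    }
```

In this sketch, comparing `segment_summary(responses, top2)` with `segment_summary(responses, bottom2)` shows whether self-described power users and less confident users rate value and ease of use differently; respondents in the middle of the scale are deliberately left out of both segments.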

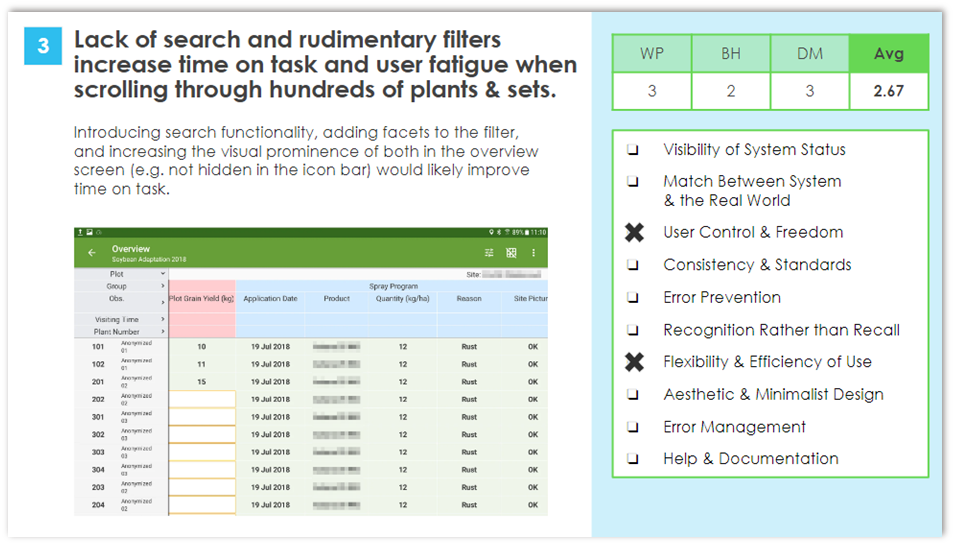

Heuristic Analysis

As the list of steps and tools we uncovered for managing Trait Exposure grew (so many Excel files emailed between people!), it became important to understand those tools ourselves.

With the help of the product designers, we conducted heuristic analyses of the existing tools, documenting the information architecture and data models as we went along.

Overall Research Activities & Deliverables

- Hybrid user interviews / remote contextual inquiries

- UMUX-Lite derived user surveys

- Heuristic reviews of existing tools

- Content documentation & analysis

- Analytics platforms or behavioral metrics (unavailable for this project)

Analysis

The work isn’t complete just because your user interviews are done.

Though our research methods weren't diverse, we documented a significant amount of data that exposed how risky and ad hoc the current processes really were. Much of this was new information to stakeholders. The analysis phase was critical to building our strategic understanding of the problem.

Analysis Activities & Deliverables

- Thematic analysis of IDIs

- Current state journey mapping

- User segmentation and empathy mapping

Strategy

We did the research. Now what do we do about it?

Scientists and compliance specialists were doing their jobs the most efficient way they could – with workarounds. Now that we understood their pain points and what the business required, what would a better set of Exposure management tools look like?

I facilitated a pair of post-research readout workshops with the core team to revisit our goals, ideate around possible solutions, and create a product canvas.

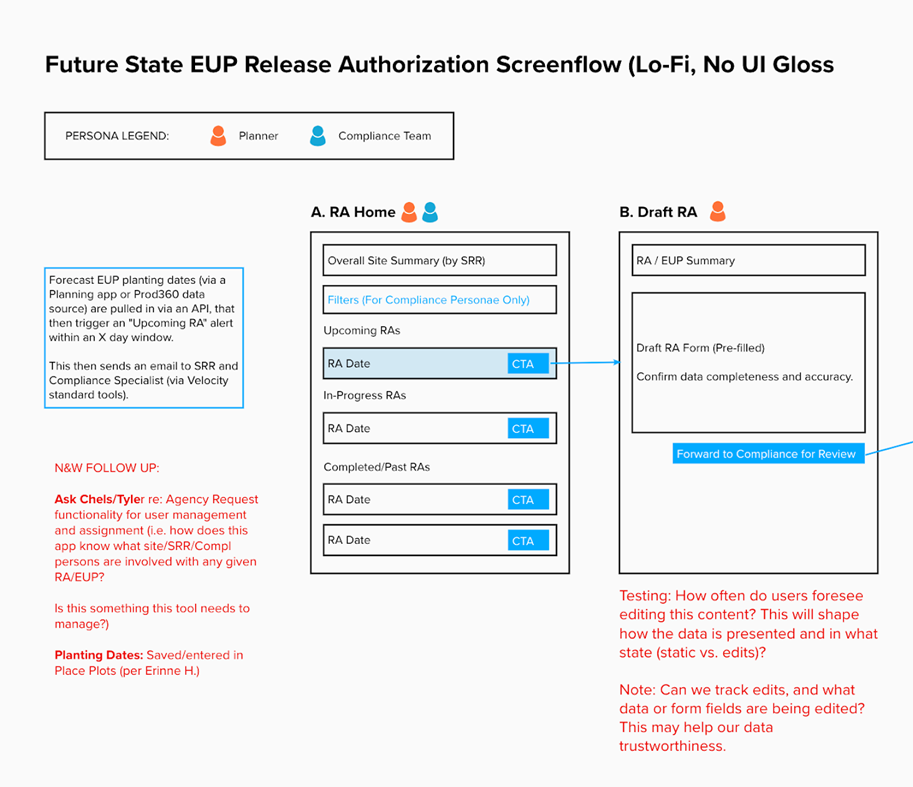

Lo-Fi Wires

With a clearer, aligned understanding of the direction and strategy for the new Trait Exposure tool, we were able to start putting structure around its future state.

This included screenflows and very low-fidelity wireframes as organizational aids for the product designer. (This was, in fact, the first time I’d had a chance to revisit these classic forms of UX artifacts in some years!)

Strategy Activities & Deliverables

- Cross-Functional Ideation Workshop

- Revisiting Definitions of Success & Metrics

- Product Canvas

- Future State Journey Mapping

- Defining Jobs-to-be-Done and Assigning them to Jira Epics

- Lo-Fi Screenflows and Wireframes

A note on these design artifacts: because of changes in vendors and platforms, I no longer had access to the product canvas or the JTBD documentation by the time I left Bayer Crop Science.

Testing & Validation

With Discovery complete, the product team took an iterative approach to UI/IxD design. My testing protocol consisted of three increasingly granular methods that allowed us to refine designs at each step:

Validation Cycles:

- Itemized Heuristic Reviews of the Designer’s Figma Mockup

- Moderated, Qualitative-Focused Usability Tests (Small Groups)

- Quantitative Usability Tests (Larger Groups, Measuring Success Rates and Time on Task)